You will need:

- a DB instance to read the schema from

- an Oracle ODBC driver that EA can use

- an ODBC configuration pointing to the DB source schema

- user credentials for the DB source schema

- an EA project to import into

Oracle DB instance

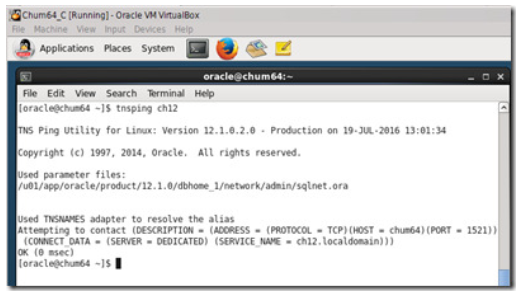

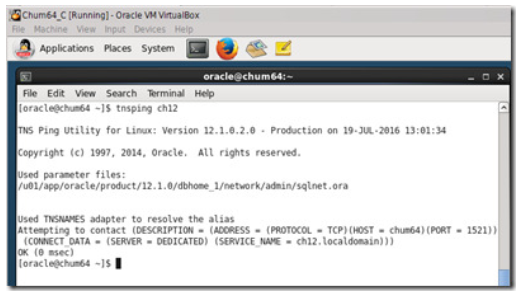

We could point at the Production system, but this is not a great idea, especially when you’re testing. I use a local virtual machine (running Oracle Linux 6.6) with an instance of Oracle Database 12c. This is where I do all my test builds, pulled from the latest branch in source control.

Oracle ODBC Driver

I’m going to assume you’ve got this covered… otherwise, this is a good starting reference:

(We could try using the default Microsoft ODBC Driver for Oracle, but I’ve never got it to work.)

ODBC and Enterprise Architect

My local operating system is 64-bit Windows. However, my installation of Enterprise Architect is 32-bit. This means that it will use the 32-bit ODBC components, and I need to use the 32-bit ODBC Data Source Administrator to configure a data source (DSN).

And if Life weren’t complicated enough:

But, the bottom line is:

On a 64bit machine when you run “ODBC Data Source Administrator” and created an ODBC DSN, actually you are creating an ODBC DSN which can be reachable by 64 bit applications only.

But what if you need to run your 32bit application on a 64 bit machine ? The answer is simple, you’ll need to run the 32bit version of “odbcad32.exe” by running “c:\Windows\SysWOW64\odbcad32.exe” from Start/Run menu and create your ODBC DSN with this tool.

Got that?

- 64-bit ODBC Administrator: c:\windows\System32\odbcad32.exe

- 32-bit ODBC Administrator: c:\windows\SysWOW64\odbcad32.exe

It’s mind-boggling. The UI looks identical too.
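To keep the two straight, here is a minimal Python sketch that maps an application's bitness to the matching administrator tool. The function name `odbc_admin_path` is hypothetical, and it assumes a 64-bit Windows install rooted at `C:\Windows`; it only builds the path and does not launch anything.

```python
import struct
from pathlib import PureWindowsPath


def odbc_admin_path(app_bits, windows_dir=r"C:\Windows"):
    """Return the ODBC Data Source Administrator that matches an
    application's bitness on 64-bit Windows (hypothetical helper)."""
    if app_bits not in (32, 64):
        raise ValueError("app_bits must be 32 or 64")
    # Counter-intuitively, the 32-bit tool lives in SysWOW64 and the
    # 64-bit tool lives in System32.
    folder = "SysWOW64" if app_bits == 32 else "System32"
    return str(PureWindowsPath(windows_dir) / folder / "odbcad32.exe")


if __name__ == "__main__":
    # Bitness of the running Python interpreter, as an example "application".
    bits = struct.calcsize("P") * 8
    print(bits, odbc_admin_path(bits))
```

For a 32-bit Enterprise Architect install, `odbc_admin_path(32)` gives the SysWOW64 path, which is the one to run.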

Configuring a Data Source (DSN)

- Run the 32-bit ODBC Administrator

- Select User DSN

- Click on “Add…”

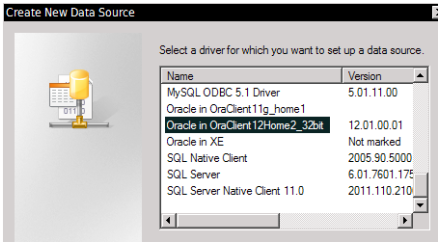

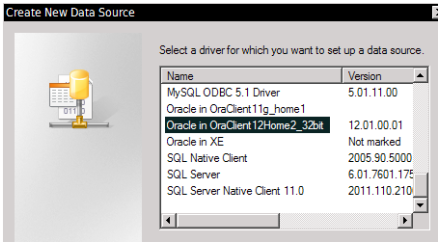

- Select the Oracle driver:

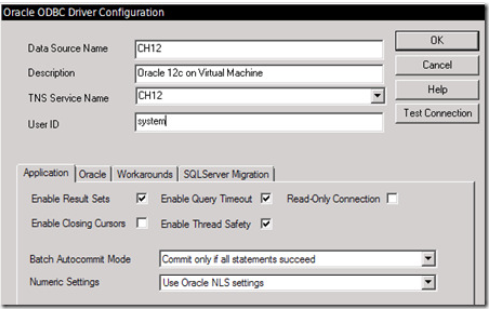

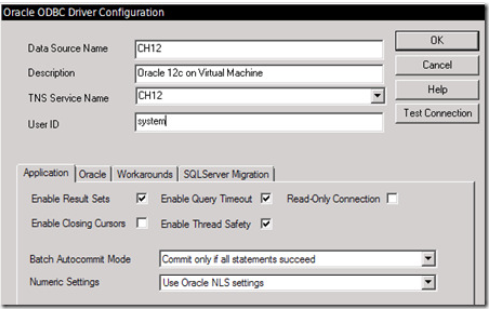

We’re not finished. We need to enter some details:

The only critical parameter here is the TNS Service Name which needs to match whatever you’ve set up in the TNSNAMES.ORA config file, for your target DB instance.
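A quick way to double-check the name before typing it into the dialog is to list the aliases defined in TNSNAMES.ORA. The sketch below is a rough, assumption-laden parser: it treats any `NAME =` found outside parentheses as an alias, and the sample entry (`TESTDB`, host `testvm`, port 1521) is invented for illustration, not taken from my setup.

```python
import re

# Hypothetical TNSNAMES.ORA content, for illustration only.
SAMPLE = """\
# test instance
TESTDB =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = testvm)(PORT = 1521))
    (CONNECT_DATA = (SERVICE_NAME = testdb))
  )
"""

ALIAS_RE = re.compile(r"\s*([A-Za-z0-9_][A-Za-z0-9_.,\s-]*?)\s*=")


def tns_aliases(text):
    """Return the alias names defined at the top level of a tnsnames file."""
    aliases = []
    depth = 0  # current parenthesis nesting level
    for raw in text.splitlines():
        line = raw.split("#", 1)[0]  # drop comments
        if depth == 0:
            match = ALIAS_RE.match(line)
            if match:
                # One entry may declare several comma-separated aliases.
                names = match.group(1).split(",")
                aliases.extend(n.strip().upper() for n in names if n.strip())
        depth += line.count("(") - line.count(")")
    return aliases


def has_alias(text, name):
    """True if `name` (case-insensitive) is defined in the file text."""
    return name.upper() in tns_aliases(text)
```

In practice you would read the real file with `open(path).read()` and pass its text to `has_alias` together with the TNS Service Name you intend to use in the DSN.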

Here, I’ve used a user name of SYSTEM because this is my test Oracle instance. Also, it will allow me to read from any schema hosted by the DB, which means I can use the DSN for any test schema I build on the instance.

Now that the DSN is created, we can move on to working in Enterprise Architect.

Case Study: Importing into a clean project

Note: I’m using images from a Company-Internal How-To guide that I authored. I feel the need to mask out some of the details. Alas I am not in a position to re-create the images from scratch. I debated omitting the images entirely but that might get confusing.

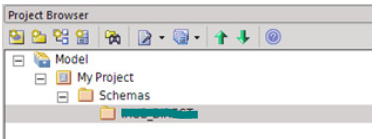

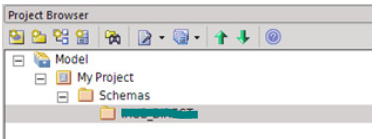

In Enterprise Architect, we have a clean, empty project, and we didn’t use Wizards to create template objects. It is really just a simple folder hierarchy:

Right-click on the folder and select from the cascading drop-down menu of options:

- Code Engineering > Import DB schema from ODBC

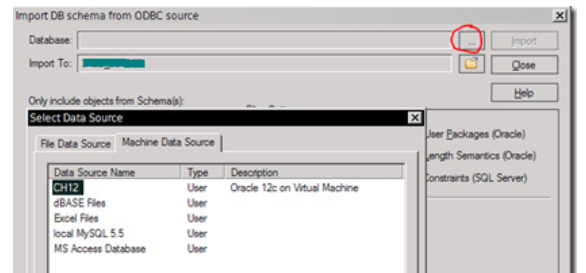

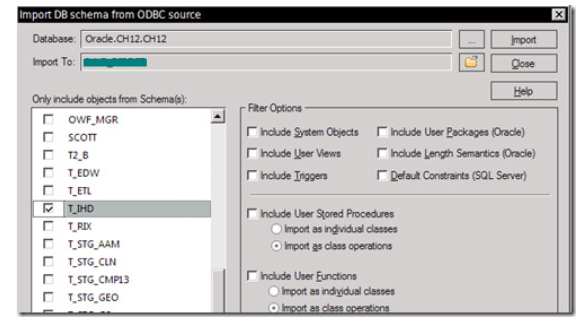

This will bring up the Import DB schema from ODBC source dialog.

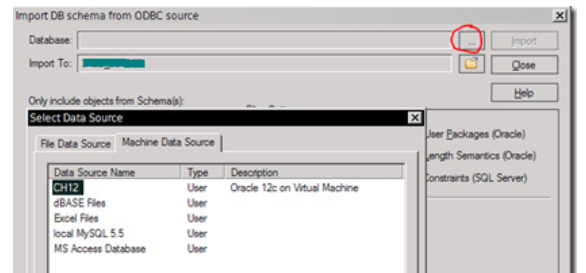

Click on the chooser button on the right side of the “Database” field to bring up the ODBC Data Source chooser:

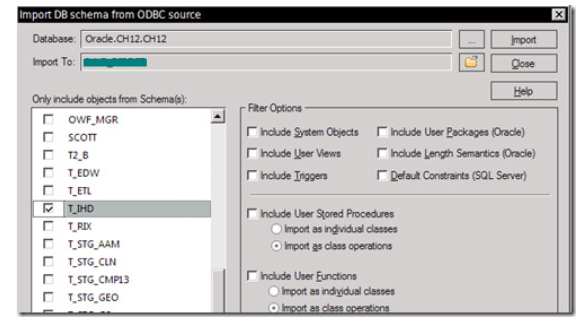

The DSN we created should be available under the Machine Data Source tab. Select it, and click OK. We should be prompted to enter a password for our pre-entered User Name, after which Enterprise Architect will show us all the schemas to which we have access on the DB:

Previously, on this instance, I ran the database build scripts and created a set of test schemas using the T_ name prefix.

We’re going to import the contents of the T_IHD schema into our project, so we check the “T_IHD” schema name.

Our intention is to import (create) elements under the package folder, for each table in the T_IHD schema.

Review the filter options carefully!

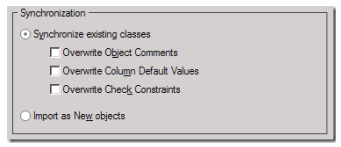

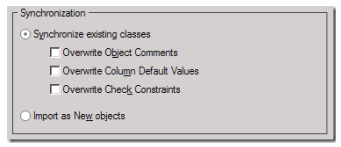

The default settings are probably correct, if we are only interested in creating elements for each table. Note the Synchronization options:

The package folder is empty, so you might think that we need to change this to “Import as New objects”. Don’t worry. New objects will be created if they don’t already exist.

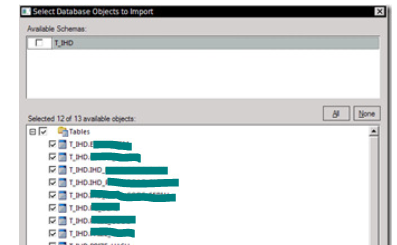

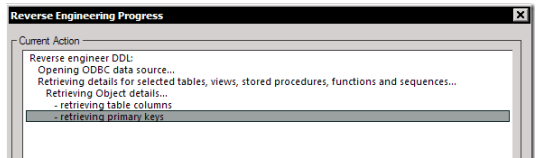

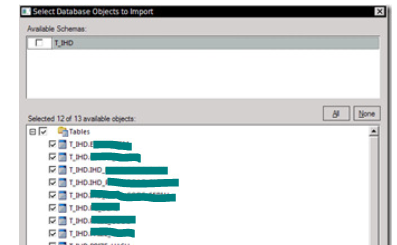

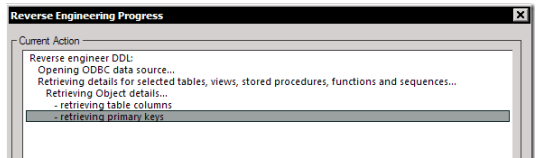

Now click the “Import” button at the top right of the dialog. After a short wait, during which EA is retrieving metadata from the database, the contents of the T_IHD schema will be presented to us in the “Select Database Objects to Import” dialog:

We are only interested in the tables, so check the [x] Tables checkbox and all the contained tables will be selected.

Are there objects we don’t need to import?

Often there are objects we’re not interested in including in the Data Model. For example, the TEMP_EVENT table might only be used as an interim location for data, during some business process, and not worth complicating the model with.

We can clear the checkbox next to the TEMP_EVENT table name to skip it.

Now, having validated our selection, we can press the OK button to start the import.

The process may take some time. When it completes, we can either close the Import dialog or select another schema and destination folder to import a different schema.

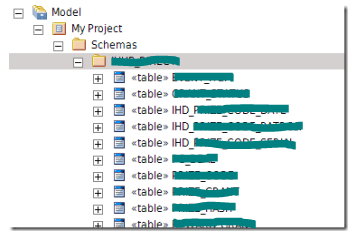

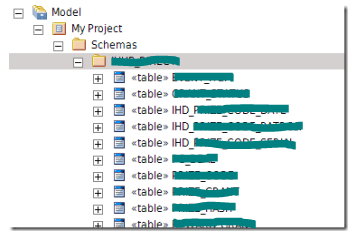

The table elements should now be visible in the Project hierarchy:

Case Study: Refreshing a schema

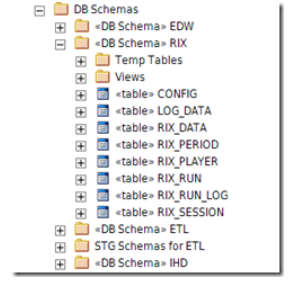

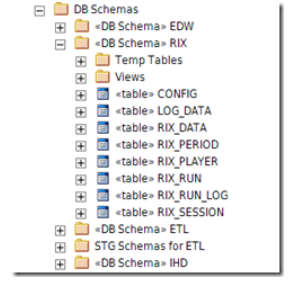

We need to refresh the RIX schema tables in the model. This schema is already in the model, with lots of additional element and attribute-specific notes that we need to retain.

Two important things to note:

- The schema contents are organized differently (temp tables were imported into the model but then moved into a sub-folder).

- There are some minor structure changes to columns in the source database.

We need to refresh the schema in the model, with the new structure from the DB. The process to follow is almost exactly the same as the clean import described earlier:

Select the package folder

- Right-click > Code Engineering > Import DB schema from ODBC

- Select the T_RIX schema

- Make sure the Synchronization options are “(o) Synchronize existing classes” and “[ ] Overwrite object comments”. We do not want to remove any existing notes entered against elements and attributes in the model.

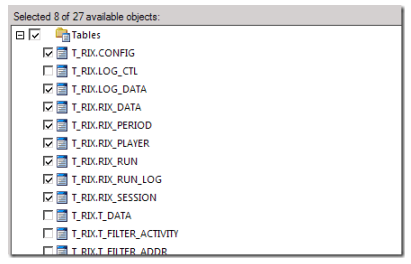

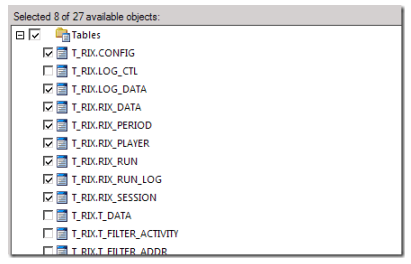

Now, in this example we are only interested in refreshing those tables that are already in the model, in the current folder. That represents a subset of the total set of tables that are in the current DB schema:

In the image above, LOG_CTL is a table we don’t care about (not in the model); and the T_* tables are temporary tables that, in the model, are in a different folder. We’ll do those next (see below).

After the import process is completed, we should be able to drill down and see the schema changes reflected in the updated model.

We can repeat the process for the Temp Tables sub-package, this time selecting only the T_* temporary tables in the import selection.

Summary:

- Table objects in the model might be moved around into different folders, per type, for documentation purposes.

- The schema import process imports all selected tables into the current folder.

- Therefore, if you have separated out tables in a single schema, you should refresh them on a folder-by-folder basis, selecting only the objects you want to be in each folder in the model.
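The folder-by-folder rule can be planned up front. This is a small Python sketch, not anything EA provides: `plan_refresh` is a hypothetical helper that assigns each table in the schema to the first model folder whose name pattern matches, and skips tables that are not in the model. Apart from `LOG_CTL` and the `T_*` prefix, the table and folder names are made up for the example.

```python
from fnmatch import fnmatchcase


def plan_refresh(schema_tables, folder_patterns, skip=()):
    """Group tables by the model folder they should be refreshed into.

    folder_patterns maps folder name -> list of glob patterns; folders are
    tried in insertion order, so put the catch-all folder last.
    """
    plan = {folder: [] for folder in folder_patterns}
    for table in schema_tables:
        if table in skip:
            continue  # not in the model, so never selected for import
        for folder, patterns in folder_patterns.items():
            if any(fnmatchcase(table, p) for p in patterns):
                plan[folder].append(table)
                break  # a table belongs to exactly one folder
    return plan


if __name__ == "__main__":
    tables = ["ACCOUNT", "LOG_CTL", "T_LOAD", "T_STAGE"]  # hypothetical
    folders = {"Temp Tables": ["T_*"], "RIX": ["*"]}
    for folder, names in plan_refresh(tables, folders, skip={"LOG_CTL"}).items():
        print(folder, names)
```

Each entry in the resulting plan corresponds to one pass through the import dialog: select that folder in the project, then check only the listed tables.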

With careful set-up, it is possible to import and refresh table structures from database schemas into a project in Enterprise Architect, without over-writing existing documentation and attribute notes.
